Graphics Pipelines for Next Generation Mixed Reality Systems

The Pipelines project is an EPSRC-funded investigation into new graphics techniques and hardware. Our goal is to re-purpose and re-architect the current graphics pipeline to better support the next generation of AR and VR systems. These new systems will require far greater display resolutions and framerates than traditional TVs and monitors, resulting in greatly increased computational cost and bandwidth requirements. By developing new end-to-end graphics systems, we plan to make rendering for these displays practical and power-efficient.

A major focus of the project so far has been improving efficiency by rendering or transmitting only the content in each frame that a user can perceive, that is, by displaying a metamer of the target content. More detail on our work in this direction is given on the page for our paper Beyond Blur.

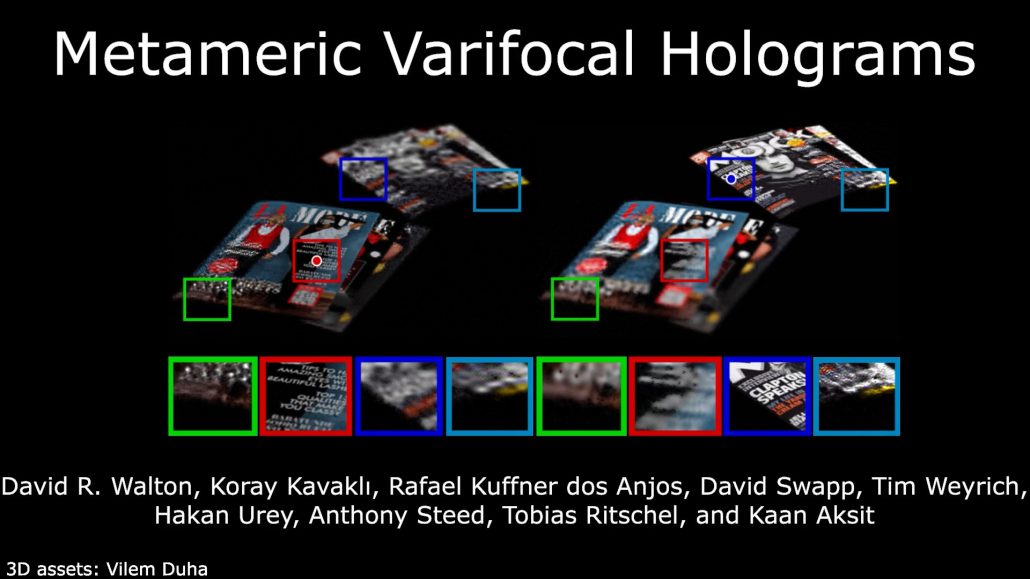

In our paper Metameric Varifocal Holograms we explore how hologram optimisation can be improved by optimising the holograms to match only the image content that the user can actually perceive. We do this using a metameric loss function, and by reconstructing varifocal holograms: 2D planar holograms that are correct at the user’s current focal depth.

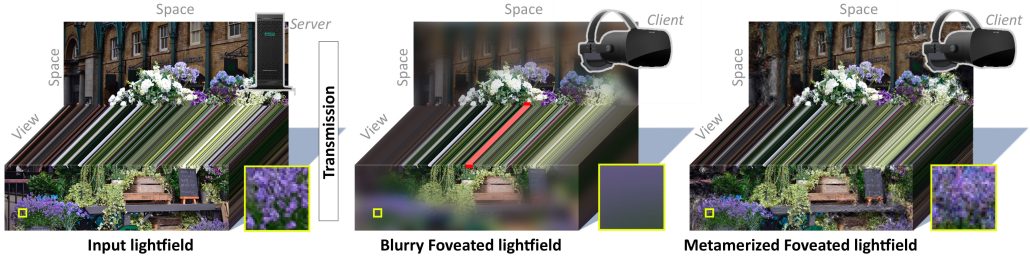

In Metameric Light Fields we extend metamer generation from 2D images to 3D light fields. This needs special consideration to give temporally consistent, high-quality results without flicker or incorrect motion.

Publications

Beyond Blur: Ventral Metamers for Foveated Rendering, ACM Trans. Graph. (Proc. SIGGRAPH 2021)

[Project Page] | [Preprint] | [Supplemental Material] | [Unity Package] | [Executable Windows Demo] | [Python Example]

To peripheral vision, a pair of physically different images can look the same. Such pairs are metamers relative to each other, just as physically-different spectra of light are perceived as the same color. We propose a real-time method to compute such ventral metamers for foveated rendering where, in particular for near-eye displays, the largest part of the framebuffer maps to the periphery. This improves in quality over state-of-the-art foveation methods which blur the periphery. Work in Vision Science has established how peripheral stimuli are ventral metamers if their statistics are similar. Existing methods, however, require a costly optimization process to find such metamers. To this end, we propose a novel type of statistics particularly well-suited for practical real-time rendering: smooth moments of steerable filter responses. These can be extracted from images in time constant in the number of pixels and in parallel over all pixels using a GPU. Further, we show that they can be compressed effectively and transmitted at low bandwidth. Finally, computing realizations of those statistics can again be performed in constant time and in parallel. This enables a new level of quality for foveated applications such as remote rendering, level-of-detail and Monte-Carlo denoising. In a user study, we finally show how human task performance increases and foveation artifacts are less suspicious, when using our method compared to common blurring.
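As a rough illustration of the idea of matching local statistics, the following Python sketch computes smoothed first and second moments of an image and then draws a noise image with similar local mean and standard deviation. It is a heavily simplified toy under stated assumptions, not the method from the paper: it works on raw grayscale intensities rather than steerable filter responses, uses a fixed box window rather than eccentricity-dependent pooling, and the names `box_blur`, `local_moments` and `realize` are hypothetical.

```python
import numpy as np


def box_blur(img, radius):
    """Mean over a (2*radius+1)^2 window, computed with an integral image.

    The image is edge-padded so the output has the same shape as the input.
    The cost per pixel does not depend on the window size.
    """
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    total = (ii[k:k + h, k:k + w] - ii[:h, k:k + w]
             - ii[k:k + h, :w] + ii[:h, :w])
    return total / (k * k)


def local_moments(img, radius):
    """Smoothed local mean and standard deviation (assumed stand-in statistics)."""
    mean = box_blur(img, radius)
    var = np.maximum(box_blur(img * img, radius) - mean * mean, 0.0)
    return mean, np.sqrt(var)


def realize(mean, std, radius, rng):
    """Draw white noise and rescale it towards the target local mean and std."""
    noise = rng.standard_normal(mean.shape)
    n_mean, n_std = local_moments(noise, radius)
    return mean + std * (noise - n_mean) / (n_std + 1e-8)


# Usage: extract statistics from a random test image and synthesise a new image.
rng = np.random.default_rng(0)
image = rng.random((32, 32))
mean, std = local_moments(image, radius=3)
synth = realize(mean, std, radius=3, rng=rng)
```

The synthesised image differs from the input pixel by pixel but has roughly similar local mean and contrast; the rescaling is only approximate because the target statistics vary across each window.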

Metameric Varifocal Holograms (Proc. IEEEVR 2022)

[Project Page] | [Paper] | [Video] | [Library (Hologram Optimisation)] | [Library (Metameric Loss)]

Computer-Generated Holography (CGH) offers the potential for genuine, high-quality three-dimensional visuals. However, fulfilling this potential remains a practical challenge due to computational complexity and visual quality issues. We propose a new CGH method that exploits gaze-contingency and perceptual graphics to accelerate the development of practical holographic display systems. First, our method infers the user’s focal depth and generates images only at their focus plane without using any moving parts. Second, the images displayed are metamers; in the user’s peripheral vision, they need only be statistically correct and blend with the fovea seamlessly. Unlike previous methods, our method prioritises and improves foveal visual quality without causing perceptually visible distortions at the periphery. To enable our method, we introduce a novel metameric loss function that robustly compares the statistics of two given images for a known gaze location. In parallel, we implement a model representing the relation between holograms and their image reconstructions. We couple our differentiable loss function and model to metameric varifocal holograms using a stochastic gradient descent solver. We evaluate our method with an actual proof-of-concept holographic display, and we show that our CGH method leads to practical and perceptually three-dimensional image reconstructions.
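To make the notion of a gaze-dependent statistical loss more concrete, here is a toy Python sketch. It is an assumption-laden illustration, not the loss from the paper or its library: it compares pixels directly inside a fixed foveal radius and compares only per-tile mean and standard deviation elsewhere. It uses plain NumPy, so it is not differentiable, and the function names and parameters (`toy_metameric_loss`, `fovea_radius`, `tile`) are hypothetical.

```python
import numpy as np


def pooled_stats(img, size):
    """Mean and std over non-overlapping size x size tiles.

    The image is cropped so both dimensions are multiples of `size`.
    """
    h = (img.shape[0] // size) * size
    w = (img.shape[1] // size) * size
    tiles = img[:h, :w].reshape(h // size, size, w // size, size)
    return tiles.mean(axis=(1, 3)), tiles.std(axis=(1, 3))


def toy_metameric_loss(a, b, gaze, fovea_radius=8, tile=4):
    """Toy loss between grayscale images a and b for gaze = (row, col) in pixels."""
    rows, cols = np.indices(a.shape)
    ecc = np.hypot(rows - gaze[0], cols - gaze[1])
    fovea = ecc <= fovea_radius

    # Foveal term: plain per-pixel squared error.
    foveal_term = np.sum(((a - b) ** 2)[fovea])

    # Peripheral term: compare tile statistics only, and only for tiles
    # that contain no foveal pixels. Pixels cropped by the tiling are ignored.
    mean_a, std_a = pooled_stats(a, tile)
    mean_b, std_b = pooled_stats(b, tile)
    h = (a.shape[0] // tile) * tile
    w = (a.shape[1] // tile) * tile
    fovea_tiles = fovea[:h, :w].reshape(h // tile, tile, w // tile, tile).any(axis=(1, 3))
    periph = ~fovea_tiles
    periph_term = np.sum(((mean_a - mean_b) ** 2 + (std_a - std_b) ** 2)[periph])

    return foveal_term + periph_term


# Usage: rearranging pixels inside a peripheral tile leaves the loss near zero,
# while the same change near the gaze point is penalised.
rng = np.random.default_rng(0)
target = rng.random((16, 16))
peripheral_change = target.copy()
peripheral_change[0:4, 0:4] = target[0:4, 0:4][::-1, ::-1]
foveal_change = target.copy()
foveal_change[7:10, 7:10] = target[7:10, 7:10][::-1, ::-1]
loss_periph = toy_metameric_loss(target, peripheral_change, gaze=(8, 8), fovea_radius=3)
loss_fovea = toy_metameric_loss(target, foveal_change, gaze=(8, 8), fovea_radius=3)
```

In an optimisation setting, the same structure would need to be written in an automatic differentiation framework so that it can drive a gradient descent solver.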

Metameric Light Fields (Poster, Proc. IEEEVR 2022)

[Project Page] | [Poster] | [Short Paper] | [Teaser Video]

Ventral metamers, pairs of images which may differ substantially in the periphery, but are perceptually identical, offer exciting new possibilities in foveated rendering and image compression, as well as offering insights into the human visual system. However, existing literature has mainly focused on creating metamers of static images. In this work, we develop a method for creating sequences of metameric frames, videos or light fields, with enforced consistency along the temporal, or angular, dimension. This greatly expands the potential applications for these metamers, and expanding metamers along the third dimension offers further new potential for compression.

Metameric Inpainting for Image Warping (TVCG 2022)

[Paper] | [Video] | [Code] | [Webpage]

Image-warping, a per-pixel deformation of one image into another, is an essential component in immersive visual experiences such as virtual reality or augmented reality. The primary issue with image warping is disocclusions, where occluded (and hence unknown) parts of the input image would be required to compose the output image. We introduce a new image warping method, Metameric image inpainting, an approach for hole-filling in real-time with foundations in human visual perception. Our method estimates image feature statistics of disoccluded regions from their neighbours. These statistics are inpainted and used to synthesise visuals in real-time that are less noticeable to study participants, particularly in peripheral vision. Our method offers speed improvements over the standard structured image inpainting methods while improving realism over colour-based inpainting such as push-pull. Hence, our work paves the way towards future applications such as depth image-based rendering, 6‑DoF 360 rendering, and remote render-streaming.
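The step of estimating statistics in disoccluded regions from their neighbours can be sketched as follows. This Python example is a simple stand-in, not the paper's inpainting scheme: it fills unknown entries of a single statistics map (for example a local mean) by repeatedly averaging known 4-neighbours, growing inwards from the hole boundary. The function name `fill_from_neighbours` and its parameters are hypothetical.

```python
import numpy as np


def fill_from_neighbours(values, known, iterations=50):
    """Fill unknown entries of a 2D map from known 4-neighbours.

    values: 2D array of statistics (entries where known is False are ignored).
    known:  2D boolean array, True where the statistic is valid.
    Each pass fills hole pixels that touch at least one valid pixel with the
    average of their valid neighbours, so the hole shrinks from its boundary.
    """
    vals = np.where(known, values, 0.0).astype(float)
    mask = known.astype(float)
    for _ in range(iterations):
        if mask.all():
            break
        pv = np.pad(vals * mask, 1)  # zero padding outside the image
        pm = np.pad(mask, 1)
        sum_v = pv[:-2, 1:-1] + pv[2:, 1:-1] + pv[1:-1, :-2] + pv[1:-1, 2:]
        sum_m = pm[:-2, 1:-1] + pm[2:, 1:-1] + pm[1:-1, :-2] + pm[1:-1, 2:]
        newly = (mask == 0) & (sum_m > 0)
        vals[newly] = sum_v[newly] / sum_m[newly]
        mask[newly] = 1.0
    return vals


# Usage: a statistics map with a 3x3 hole in the centre.
stats = np.full((5, 5), 2.0)
known = np.ones((5, 5), dtype=bool)
known[1:4, 1:4] = False
filled = fill_from_neighbours(stats, known)
```

Once the statistics are filled in, new pixel content for the hole would be synthesised to match them, rather than copying or blurring colours directly.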

People

Kaan Akşit, Rafael Kuffner Dos Anjos, Sebastian Friston, Prithvi Kohli, Tobias Ritschel, Anthony Steed, David Swapp, David R. Walton

Acknowledgements

This project is funded by the EPSRC/UKRI project EP/T01346X.